Ethical Considerations of AI in Healthcare

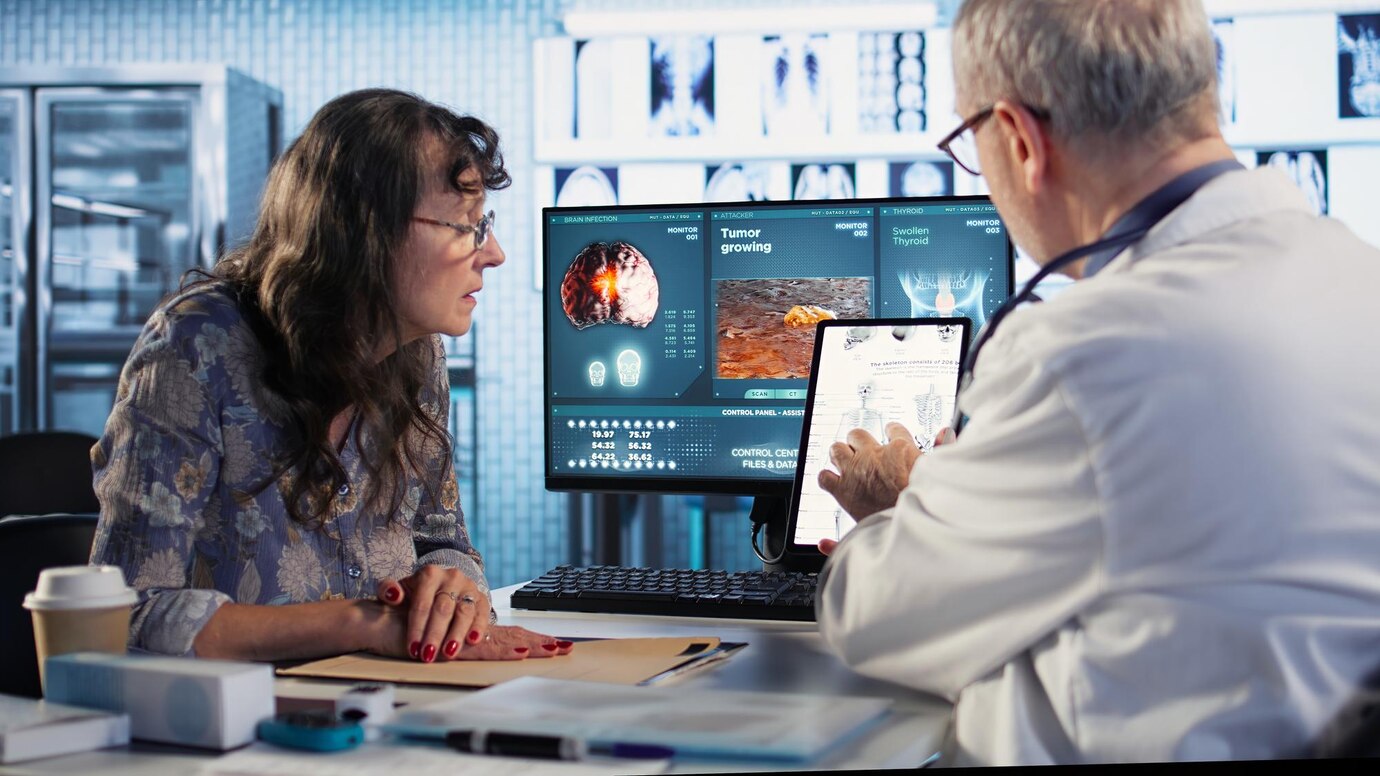

Integrating artificial intelligence into healthcare systems promises advances ranging from improved diagnostics to personalized treatment plans. As these technologies become more prevalent, however, they also raise significant ethical questions. Using AI responsibly in healthcare is crucial to protecting patient welfare, upholding professional integrity, and maintaining public trust. Ethical deliberation in this field must address not only technological efficiency but also the broader impacts on individuals and communities. Examining the ethical landscape of AI in healthcare therefore means considering fundamental principles such as privacy, fairness, transparency, and accountability, which should guide the development and implementation of AI solutions.